DeepSeek R1 shocked the world last week as its developers explained they could train an open-source reasoning AI as good as ChatGPT o1 at a fraction of the cost. Suddenly, anyone could create powerful AI models with the right combination of software innovations and decent hardware. The DeepSeek research implied that the focus wouldn’t be on high-end hardware anymore, and this tanked AI tech stocks like NVIDIA.

As we now know, however, that’s not exactly the case. The DeepSeek software innovations, as interesting as they may be, don’t tell the whole story. In the days following the R1 release, we learned that DeepSeek might have used ChatGPT answers to train its AI. OpenAI accused the Chinese company of distilling its ChatGPT models. Separately, we saw indirect evidence suggesting DeepSeek is indeed based on outputs from ChatGPT.

This suggests that DeepSeek circumvented development costs by potentially pulling from competitor AIs that were already established. As the full picture formed, the stock market recovered most of its losses.

I’m telling you all this because I’m about to show you an equally mind-blowing experiment. Researchers at Stanford and the University of Washington trained a reasoning AI called S1 that’s as good as ChatGPT o1. They did it for just $50 in compute costs using the same DeepSeek twist. They distilled a version of Gemini and used an open-source AI from China.

The S1 research paper explains how it was all possible, and before you ask, no, this won’t tank the stock market again. That is, it shouldn’t. The point of this research is to show that it could be even cheaper to train high-end AIs using new software innovations, but only after someone has developed cutting-edge frontier AIs that can be used for distillation.

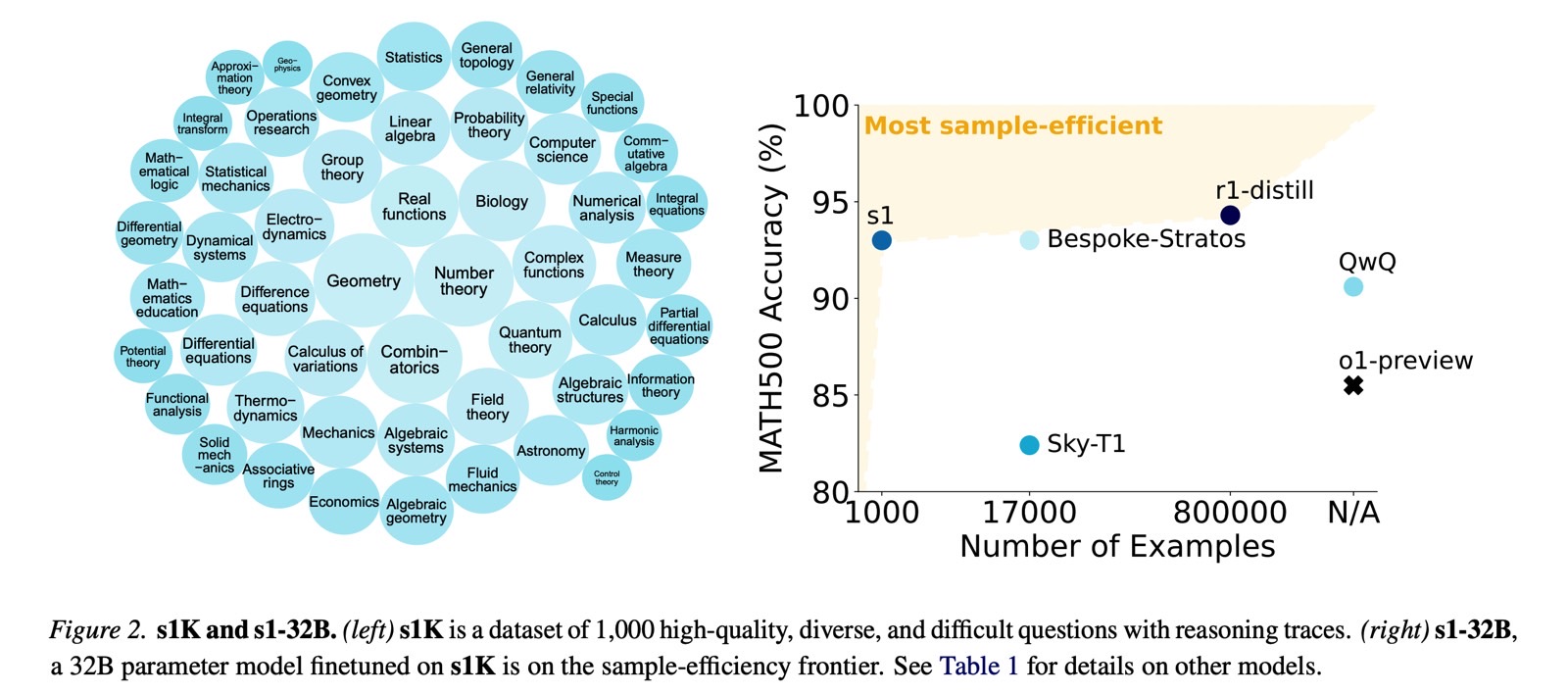

The researchers went to Google to use Gemini 2.0 Flash Thinking Experimental, an already established AI, to build a set of 1,000 high-quality reasoning questions paired with Gemini’s reasoning steps and responses. That data was then used to train the s1-32B model, which was based on an open-source Qwen model from Chinese giant Alibaba.
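To make the distillation step concrete, here is a minimal sketch of how question, reasoning, and answer triples from a stronger "teacher" model could be collected and flattened into fine-tuning text. It is not the S1 authors' pipeline: `DistilledExample`, `build_distilled_dataset`, `to_training_text`, the `Question:`/`Reasoning:`/`Answer:` markers, and the canned `fake_teacher` are all hypothetical names invented for illustration, and no real Gemini or Qwen API is called.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class DistilledExample:
    question: str
    reasoning: str
    answer: str


def build_distilled_dataset(
    questions: List[str],
    teacher: Callable[[str], Tuple[str, str]],
) -> List[DistilledExample]:
    """Ask the teacher for (reasoning, answer) on every question."""
    dataset = []
    for question in questions:
        reasoning, answer = teacher(question)
        dataset.append(DistilledExample(question, reasoning, answer))
    return dataset


def to_training_text(example: DistilledExample) -> str:
    """Flatten one example into a single string for supervised fine-tuning.

    The section markers are made up for this sketch.
    """
    return (
        f"Question: {example.question}\n"
        f"Reasoning: {example.reasoning}\n"
        f"Answer: {example.answer}"
    )


def fake_teacher(question: str) -> Tuple[str, str]:
    # Hypothetical stand-in for a call to a stronger model; returns canned text.
    return (f"Think step by step about: {question}", "42")


dataset = build_distilled_dataset(["What is 6 * 7?"], fake_teacher)
print(to_training_text(dataset[0]))
```

In a real run, the teacher callable would wrap the stronger model, and the flattened strings would feed a standard fine-tuning job on the smaller open-source model.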

The researchers needed less than 30 minutes to train S1 using the Gemini-distilled data (the 1,000 prompts). After that, S1 was already showing high scores in AI benchmarks. The model actually outperformed o1-preview by up to 27% on competition math tasks.

A Stanford engineer told TechCrunch he could rent the necessary compute today for about $20.
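For a sense of how a rental figure like that comes together, the arithmetic is simply GPUs times hours times hourly price. The sketch below uses a hypothetical `rental_cost` helper, and the GPU count and hourly price are illustrative assumptions rather than figures from the article. Only the roughly half-hour duration echoes the training time mentioned above.

```python
def rental_cost(num_gpus: int, hours: float, price_per_gpu_hour: float) -> float:
    """Total cost = number of GPUs x wall-clock hours x hourly price per GPU."""
    return num_gpus * hours * price_per_gpu_hour


# Assumed inputs for illustration only: 16 rented GPUs, half an hour of
# training, and a made-up price of $2.50 per GPU-hour.
cost = rental_cost(num_gpus=16, hours=0.5, price_per_gpu_hour=2.50)
print(f"${cost:.2f}")
```

Changing any one of the three inputs scales the total linearly, which is why short training runs on rented hardware can be so cheap.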

The researchers devised various other innovations to train the S1 reasoning model to match the abilities of ChatGPT o1. They focused on allocating more compute to the model during inference, that is, when the AI is formulating its response.

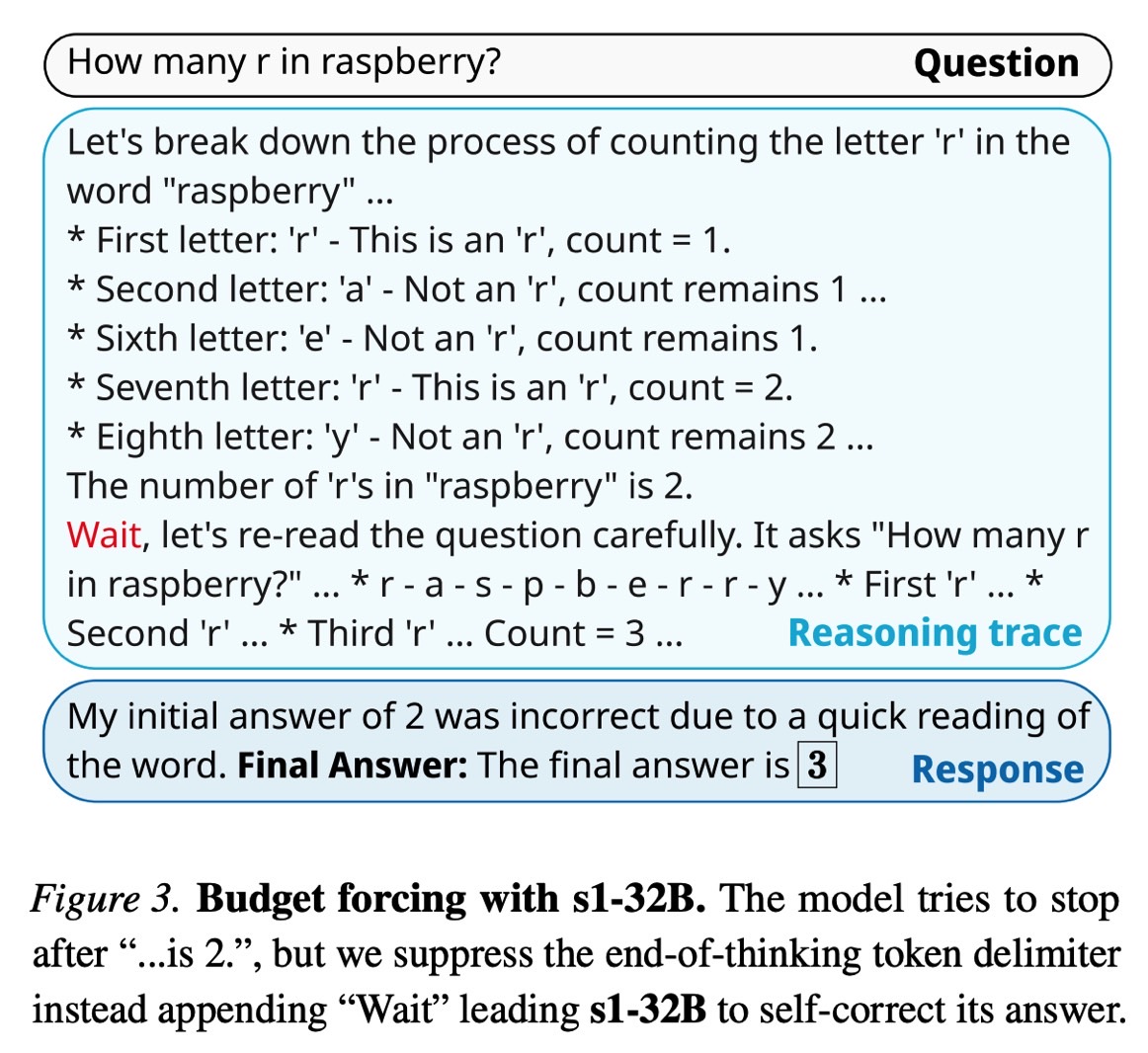

Also important is the use of a “wait” token during the reasoning part, which helps S1 reach a more accurate conclusion. The “wait” trick can improve responses and further reduce costs.
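The idea can be sketched as a small control loop: when the model is about to stop reasoning, append "Wait" and let it continue, which spends more compute at inference time. This is a rough illustration under stated assumptions, not the researchers' implementation. `reason_with_wait`, the `generate` callable, and `toy_model` are hypothetical, and the toy model simply returns canned text so the example runs without any AI library.

```python
from typing import Callable


def reason_with_wait(
    prompt: str,
    generate: Callable[[str], str],
    extra_rounds: int = 1,
    wait_token: str = "Wait",
) -> str:
    """Return the reasoning text after nudging the model to keep thinking.

    `generate` is a hypothetical function: it takes the text so far and
    returns the next chunk of reasoning, ending where the model would stop.
    """
    reasoning = generate(prompt)
    for _ in range(extra_rounds):
        # Instead of accepting the stop, append "Wait," so the model
        # re-examines what it has written and continues from there.
        reasoning = reasoning + " " + wait_token + ","
        reasoning = reasoning + generate(prompt + reasoning)
    return reasoning


def toy_model(text: str) -> str:
    # Canned stand-in for a language model: its first pass contains a slip,
    # and it corrects itself only when the text ends with a "Wait," nudge.
    if text.endswith("Wait,"):
        return " let me recheck: 17 + 25 = 42."
    return " 17 + 25 = 32."


result = reason_with_wait("What is 17 + 25?", toy_model)
print(result)
```

Here `extra_rounds` is the knob that trades extra inference compute for a second look at the answer. Setting it to 0 returns the model's first attempt unchanged.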

The S1 paper is unlikely to make as many waves as DeepSeek R1 did, but it’s probably just as important. This opens the door to a new wave of AI models that can be as powerful as the likes of ChatGPT, Gemini, DeepSeek, and others without costing as much.

While the cost is misleading because the model uses a distillation of a more advanced AI, it is still an important breakthrough other AI companies might take advantage of.

I said last week that DeepSeek R1 could offer Apple ideas on how to make Apple Intelligence more powerful while processing stays on-device. The S1 technique could be equally important.

But I’ll say again that AI breakthroughs like R1 and S1 should not prevent the top competing AI companies from investing more money in compute.

High-end hardware will be needed to create the next big AI models on the road to AGI. OpenAI, Google, and all the big names in AI tech will continue to come up with better models that will cost millions of dollars to train. In turn, smaller AI teams, like the S1 researchers, will find ways to fine-tune these AIs, where they can, to obtain highly powerful AI models that have specific use cases in mind.

The S1 model is available on GitHub. The research can be found at this link.